Attaching a second VNIC card to compute in OCI

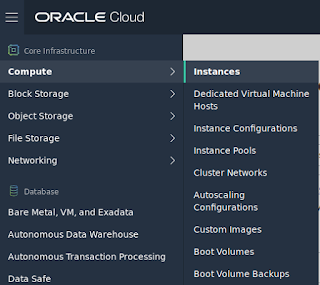

Well, just under 30 days ago, Oracle announced a series of resources you can use in OCI for free. One thing that had stopped me signing up and trying out OCI in the past was that I wanted to make the best use of the free credits, and knowing I wouldn't get a full chance to try things out in the 30 days, I didn't want to sign up prematurely. Now that they offer some free resources, this prompted me to sign up. I am now at the end of the 30-day period where I have some credits to use non-free resources. One final thing I wanted to try out was attaching multiple VNIC (Virtual network interface card) to a single compute instance. One use-case of these is that you may want a machine accessible in 2 different networks. It's not just a matter of attaching it in the OCI console - to bring the interface up you have to perform a couple of extra steps. When I was first trying this, I didn't read the docs and figured I would just have to edit the interface config script and bri