Setting up a simple web server on OCI

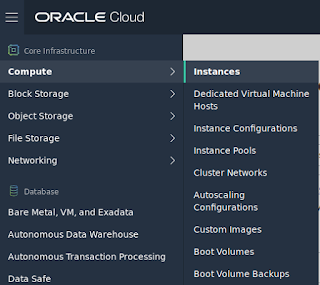

Did you miss the news? Oracle has announced a free tier for OCI, which includes 2 compute instances and 2 autonomous databases, among a set of other free resources, up to a certain limit. This tier isn't going to be suitable for high-performance workloads, but hey, it's a pretty good deal, I think.

If you've been following my activity, you will have noticed that I've been starting to do a bit more with OCI, so what better time to have an actual play around? In this post, starting from a completely clean slate (no virtual networks, no compute instances, etc.), I wanted to see how to go about setting up an accessible web server. I opted for Ubuntu since that is my daily driver, so I'll just be consistent.

So, head over to the console and navigate to the compute section. Once there, click the Create Instance button. You will see that Oracle Linux is selected by default. So, let's see what else is available by clicking the Change Image So...